Over the past few years, there has been a rapid rise of digitization and significant advancements in high-end ‘Hollywood style’ production technology. These developments have unveiled numerous opportunities for organizations in the entertainment industry, enabling them to make improvements in efficiency, costs, and resources.

This ‘Hollywood style’ production technology is now so advanced, portable, and intuitive that an experienced solo content producer, with the right tools, can produce consumer-ready content of the same (if not better!) quality without a full production team.

With expertise from the gaming industry, visual effects, and premium motion tracking technology combined, the future potential of content and immersive entertainment experiences seems limitless. If we can dream it, the chances are we’ll eventually be able to do it.

The challenge

As a full-stack visual effects and virtual production studio specializing in innovative visualization solutions, Area of Effect (AOE) was approached by Loud Robot, a joint venture record label between RCA Records and Bad Robot.

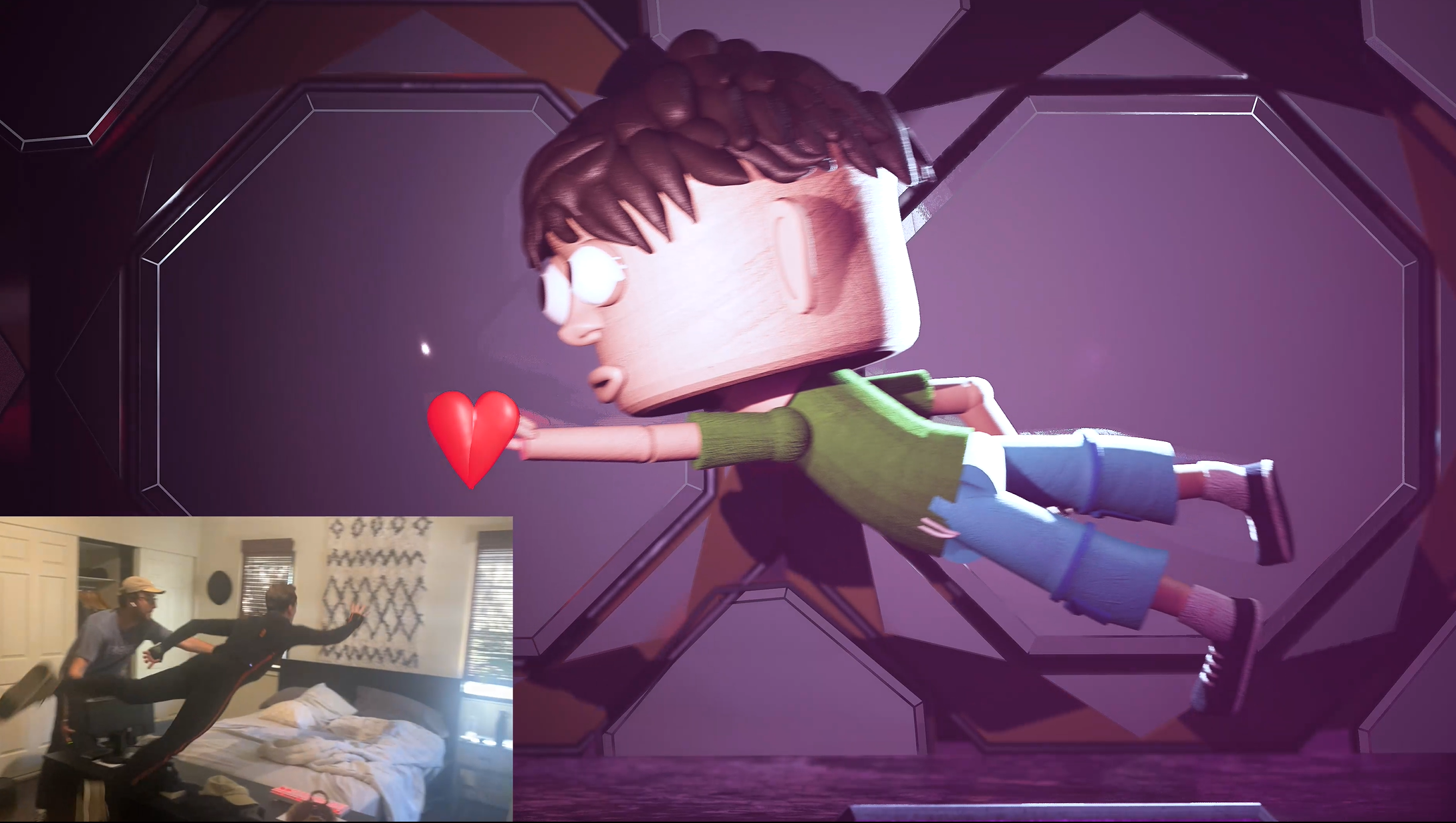

The project involved creating a music video for Sally Boy’s new single, i Love U, with a unique challenge: can one individual with the right technical ability create a music video in-engine, using high-end modern tools such as Xsens MVN Animate, Faceware, and Unreal Engine?

Diving in at the deep end

Bryce Cohen, Real-Time VFX Associate at AOE, was tasked with discovering just how much one individual could do to create a music video in Unreal Engine, from the very first conceptual stages to final editing and production. The project gave him the opportunity to showcase his technical abilities using cutting-edge Hollywood-style technology.

The first stage was discovering what technology needed to be used to create the desired end result. For example, the video was going to be based around a character, who would be talking and moving their mouth to react, so face tracking was a must. The character was also going to be moving around a lot, so camera tracking was required. In the end, a decision was made to use all available technology and really push the boundaries of what was possible through motion capture.

“When I was first approached about this project, I was nervous about the entire thing since it was nothing but variables,” said Cohen. “Creatives with the right technical abilities have a whole new world of virtual production available to them through utilizing new and improved technology such as mocap.”

Lights, camera, action!

A lot of the technology used within the video was brand new to Cohen and he only had hours to familiarize himself with how to use it.

“It’s so user intuitive and straightforward, with all the brilliant tutorials available online, you’re able to learn solo in-studio,” said Cohen. “However, it’s important to note that due to the cost and level of skill required, these are not consumer or hobbyist tools.

“The production of this project was full of little moments of discovery. My favorite of which was trying to figure out how to animate butterflies to bring more life to one of the scenes. The solution? Taping a camera tracker to the head of the director, Jake Zaoutis, and having him run around my garage, acting like a butterfly.”

A key piece of high-end technology that made the whole video production possible was the Xsens suit. This enabled real-time referencing of the video shots for a quick production pipeline.

The Xsens MVN Animate suit provides accurate, production-quality results similar to what an optical system can provide, every time and from anywhere in the world. This boundary-pushing technology provides clean, production-ready data both inside and outside a studio. Cohen found the collection of real data to be crucial to the success of the video.

Cohen said: “That was another bonus in terms of the way the suit itself was structured. At no point did we worry about breaking the suit or find it limiting; at times, you forgot you were wearing it!”

Making the impossible, possible

In Cohen’s opinion, there were two main challenges to overcome. The first was a software problem and the second was a more general one, regarding ‘how to work’.

For the software problem, Cohen needed to build a custom facial rig for the character. This was a very difficult process that took trial and error to get things right, but it became easier after discovering and using the tool in practice.

For the ‘how to work’ challenge, the main issue was translating creative direction from Zaoutis, who had never worked within an environment such as Unreal Engine before, into actions, processes, and outcomes. Cohen found he was responsible not only for solving each problem but also for explaining whether or not an idea was worth moving forward with, predominantly due to time constraints and resource dedication. Compromise was key.

There were many scenes within the final video that wouldn’t have been possible to create without the use of mocap, specifically the Xsens suit. For example, when the character is running down a street, the graphics and motion were so realistic that the team couldn’t have looped a running animation, and they didn’t have a treadmill standing by. It wouldn’t have been possible to do this with markerless mocap either, as the camera field of view would have been too wide, and there were no other suitable alternatives.

With the intuitiveness of the technology, Cohen had as much control and customizability as possible in order to make it feel like a ‘real’ production. This resulted in the movements recorded being tailored to the exact shot required for the video, with no authenticity lost by automating parts.

“When you’re trying to make something real in a digital space, you need everything that’s being put into that space to be real. It’s the imperfections and happy accidents that make things feel real,” Cohen explained.

In terms of preparation, the artist and face behind the character, Sally Boy, had none. He was shown the character for approval and then arrived in the studio (garage), put on the Xsens suit and had a list of over 100 different physical movements to do. Those actions were then applied to the character.

The final music video was an extension of Sally Boy’s art and a reflection of the emotions in his music, not just a piece of marketing material.

This technology has the potential to bridge the gap between the gaming and film industries. When they start to work hand in hand, individuals can produce, as Cohen said, “some really exciting stuff.”

About AOE

Area of Effect is a full-stack visual effects and virtual production studio specializing in innovative visualization solutions for feature films, television, and commercial production.

Based in Los Angeles, New Orleans, and Honolulu, our Academy Award-winning crews aim to change the way content creators think about the entire pre- and post-production processes. We work with some of the biggest names in film, television, and gaming in order to achieve and drive our creative and technical goals, including the multi-Academy Award-winning VFX Supervisor Rob Legato.
