First developed by Ubisoft, Motion Matching is an alternative to the state machine method used to animate in-game characters. With a state machine, animators map out every animation state in a sequence, including all of the transitions between them, and then wire them up by hand. Motion Matching instead predicts the animated character’s future trajectory from the player’s input. The software then searches its library of animation data for the pose that best matches both the character’s current pose and that predicted movement. This substantially reduces the time needed to animate a sequence.
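To make the idea of predicting a future trajectory more concrete, here is a minimal Python sketch. It assumes a 2D ground-plane trajectory and a simple model in which the character’s velocity eases exponentially from its current value toward the velocity the stick is asking for. The function name, the `responsiveness` parameter and the sample times are illustrative assumptions, not details of Ubisoft’s or Kenneth Claassen’s implementations.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def predict_trajectory(pos: Vec2, vel: Vec2, stick: Vec2, max_speed: float,
                       sample_times: List[float],
                       responsiveness: float = 5.0) -> List[Vec2]:
    """Predict future ground-plane positions of the character root.

    Assumed model (for illustration only): velocity decays exponentially
    from `vel` toward the desired velocity `stick * max_speed` at rate
    `responsiveness` (per second). Integrating that velocity curve gives
    p(t) = p0 + v_target * t + (v0 - v_target) * (1 - exp(-k * t)) / k.
    """
    if responsiveness <= 0:
        raise ValueError("responsiveness must be positive")
    k = responsiveness
    target = (stick[0] * max_speed, stick[1] * max_speed)
    points = []
    for t in sample_times:
        blend = (1.0 - math.exp(-k * t)) / k
        x = pos[0] + target[0] * t + (vel[0] - target[0]) * blend
        y = pos[1] + target[1] * t + (vel[1] - target[1]) * blend
        points.append((x, y))
    return points


# A character standing still while the player pushes the stick to the right.
print(predict_trajectory((0.0, 0.0), (0.0, 0.0), (1.0, 0.0), 2.0, [0.33, 0.66, 1.0]))
```

The predicted points are what the matching step would compare against the trajectories stored alongside each frame of animation data; a character already moving at the target speed simply continues in a straight line.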

While the technology is not yet the industry standard for game development, both indie studios and triple-A developers have already applied Motion Matching successfully. Well-known examples include The Last of Us Part II, Ghost Recon, For Honor, and various EA Sports titles.

Kenneth Claassen has spent several years perfecting Motion Matching tools within the Unity game engine, demonstrating the breadth of possibilities available to game developers and delivering one of the few publicly available Motion Matching tools. Using Motion Matching to its full extent requires accurate full-body motion data. Earlier this year, he contacted our Australian partner Tracklab and joined the Indie program, which supports up-and-coming talent by making Xsens motion capture accessible on a budget. We spoke with Kenneth to discover how he uses Xsens MVN Animate to achieve just that. Find out more about Motion Matching for Unity.

From engineering to coding

Ken originally spent eight years as a Chartered Civil Engineer, but his true passion and ambition lay in game development, and he kept experimenting with game engine coding in his free time.

“I really wanted to change careers to game development!” exclaimed Ken.

“I was working in Unity and coding with other game engines for around six or seven years when I saw a Motion Matching presentation by Ubisoft and thought, ‘yeah, I can do that,’” continued Ken.

“It took some learning, but a few years later I’ve managed to release Motion Matching for Unity, and it works pretty well. Since then, I’ve managed to secure an Epic Games MegaGrant to port my ideas to Unreal too, and of course, I recently acquired MVN Animate with Awinda hardware,” said Ken.

Ken plans and records all of his animation on his own: whether it’s a jump, a sprint, or a turn, it’s Ken in the Xsens suit. Before beginning his solo venture, Ken gained some experience with a team using optical, camera-based mocap, but found he could never achieve the accuracy he’s getting now.

“Optics can give you ultimate accuracy if you have a fancy studio, but for us, in a smaller studio, the results were lacking. The capture area was so small that it was really hard to capture running and sprinting. It also took us weeks to clean up the data, not to mention the 3-5k cost of renting the studio for the day,” said Ken.

“Once I got Xsens, I could capture all the data I needed within two weeks, and the automatic reprocessing removes the need for cleanup. To be honest, my experience has been fantastic,” continued Ken.

The process

Every animation session follows the same process. Planning, recording, and application make up the key components of Ken’s typical day.

“When it comes to recording, I start by designing the mocap takes. It really helps if you plan the moves a certain way ahead of time. If I were recording running, I’d record at 45 degrees, 90 degrees, and eventually all the way around. I’ll fit that into one long take and make sure there’s one second between each movement,” explained Ken.

“The reason for the one-second gap is that Motion Matching requires a trajectory, and you need to be able to extract that trajectory from the data,” continued Ken.
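One plausible way to see why the gap matters: in a typical Motion Matching setup, each frame of the database stores where the character’s root will be a short time later, so a frame only gets a usable trajectory if the take continues long enough after it. The Python sketch below assumes root positions already projected onto the ground plane, a fixed frame rate, and future sample times of roughly one-third, two-thirds and one second; these values and the function name are illustrative assumptions, not details of Ken’s tool. A production system would also rotate the offsets into the character’s local facing direction, which is omitted here.

```python
from typing import List, Optional, Sequence, Tuple

Vec2 = Tuple[float, float]


def trajectory_features(root_xz: Sequence[Vec2], fps: int,
                        future_seconds: Sequence[float] = (0.33, 0.66, 1.0)
                        ) -> List[Optional[List[Vec2]]]:
    """For each frame, return the root's offsets at fixed future times.

    Frames too close to the end of the take to reach the furthest sample
    get None, meaning they cannot serve as match candidates.
    """
    offsets = [round(s * fps) for s in future_seconds]
    horizon = max(offsets)
    features: List[Optional[List[Vec2]]] = []
    for i, (x0, z0) in enumerate(root_xz):
        if i + horizon >= len(root_xz):
            features.append(None)  # not enough future data in this take
            continue
        features.append([(root_xz[i + f][0] - x0, root_xz[i + f][1] - z0)
                         for f in offsets])
    return features


# Two seconds of a root moving along x at 30 fps.
take = [(i * 0.1, 0.0) for i in range(60)]
feats = trajectory_features(take, fps=30)
usable = sum(f is not None for f in feats)
print(usable)  # 30: the final second of frames has no full future trajectory
```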

Because Xsens captures motion with body-worn inertial sensors rather than cameras, recording every movement is simple, and Ken takes full advantage of his surroundings when performing.

“I’ll either record in my garage or, if I need to record sprinting, set up somewhere else. I’ve recorded in parks, hired churches, pretty much anywhere I can go. It’s really easy to do that,” said Ken.

“Once I’ve completed my takes, I process the animation in Blender. That’s where I do some retargeting and add some basic hand poses. I also make sure the animation root doesn’t waggle, and I smooth out the turns,” said Ken.
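Ken does this clean-up in Blender. As a generic illustration of the idea, and not his actual procedure, a centered moving average over the root’s ground-plane positions damps small side-to-side waggle while keeping the overall path. The Python sketch below assumes an odd window size; the function name and default window are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]


def smooth_root(positions: Sequence[Vec2], window: int = 5) -> List[Vec2]:
    """Centered moving average of root positions.

    Near the ends of the clip the window is truncated, so each average
    uses only the frames that actually exist.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    n = len(positions)
    smoothed: List[Vec2] = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        span = positions[lo:hi]
        smoothed.append((sum(p[0] for p in span) / len(span),
                         sum(p[1] for p in span) / len(span)))
    return smoothed


# A root walking forward along x with a 2 cm side-to-side waggle in z.
path = [(i * 0.05, 0.02 if i % 2 else -0.02) for i in range(20)]
print(smooth_root(path, window=3)[10])
```

A fuller pipeline would likely smooth root rotation as well and treat clip boundaries with more care, since a truncated window slightly pulls the first and last frames inward.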

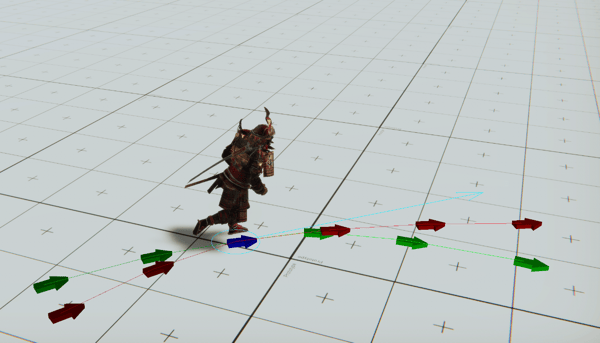

“I use it in Unity with my Motion Matching plugin: you choose all the animations you want and click process. From there you can control the character; it’s got all the movements already in it. It’s even got counter turns. If a player turns left but then changes their mind and turns right, the engine will animate it, which is extremely difficult to do without Motion Matching,” explained Ken.

“If you’ve got good data – and Xsens is certainly the best way to get it – 90% of your character animation is already done,” continued Ken.

The benefits of Motion Matching

The improvements Motion Matching brings over state machines are vast: it cuts down animation time and ultimately improves the whole process.

“[In a state machine,] if you wanted to make something with starts and stops, rather than just blending from an idle to a run, you’d need tons of animation states. You have to figure out all the transitions and what triggers each of them, and when it comes to human motion, that can be extremely complicated,” explained Ken.

“Teams can spend months figuring this stuff out. Motion Matching automatically finds another pose that’s similar and takes you to where you want to go,” continued Ken.
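At its core, that search can be framed as a nearest-neighbour lookup. The Python sketch below assumes each frame in the database has already been reduced to a fixed-length feature vector (for example, a few joint positions and velocities plus future-trajectory points) and that the query is built the same way from the character’s current pose and desired trajectory. Weights let a designer favour trajectory accuracy over pose similarity, or vice versa. The names and the linear scan are illustrative assumptions chosen for clarity, not how Motion Matching for Unity is implemented; real systems typically normalize features and use faster search structures.

```python
from typing import Sequence, Tuple


def best_match(query: Sequence[float],
               database: Sequence[Sequence[float]],
               weights: Sequence[float]) -> Tuple[int, float]:
    """Return (frame index, cost) of the database entry closest to `query`.

    Cost is the weighted squared Euclidean distance between feature vectors.
    Returns (-1, inf) for an empty database.
    """
    if len(weights) != len(query):
        raise ValueError("need one weight per feature")
    best_index, best_cost = -1, float("inf")
    for i, features in enumerate(database):
        if len(features) != len(query):
            raise ValueError(f"frame {i} has {len(features)} features, "
                             f"expected {len(query)}")
        cost = sum(w * (q - f) ** 2
                   for w, q, f in zip(weights, query, features))
        if cost < best_cost:
            best_index, best_cost = i, cost
    return best_index, best_cost


# Three frames, each described by [pose feature, trajectory feature].
frames = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
index, cost = best_match([0.9, 1.2], frames, weights=[1.0, 1.0])
print(index)  # 1: the second frame is the closest
```

Because the query is rebuilt from the player’s latest input each time the search runs, a sudden change of direction, like the counter turn Ken describes, simply leads to a different best frame.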

“This is part of a playable demo that I want to release. So without having to buy the asset, you can try it out: you can run around and see how it feels.”

Motion Symphony for Unreal

While Ken has continued to expand his Unity demo and tools, he has also been working hard to create the same experience within Unreal Engine. He calls this project ‘Motion Symphony.’

“It’s essentially a port of Motion Matching for Unity with some slight differences. Unreal’s character animation is actually more flexible. I can have the same continuous Motion Matching as I had in Unity, but I can also bring in some elements from the state machine. I can add simplified states so that when you transfer into the pose, it picks the designated animation,” explained Ken.
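One way to picture this hybrid, offered as a hypothetical sketch rather than Motion Symphony’s actual design or API: tag each clip in the animation database, and let a lightweight state decide which tags the search may consider. Locomotion then stays fully continuous, while entering a special state such as a vault restricts the search to the animations designated for it. The `PoseEntry` type, the tag names and the function below are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Collection, List, Optional, Sequence


@dataclass
class PoseEntry:
    clip: str             # source animation name (hypothetical)
    frame: int            # frame index within that clip
    tag: str              # designer-assigned category, e.g. "locomotion"
    features: List[float]


def search_in_state(query: Sequence[float], entries: Sequence[PoseEntry],
                    allowed_tags: Collection[str]) -> Optional[PoseEntry]:
    """Nearest entry by squared distance, considering only allowed tags."""
    best: Optional[PoseEntry] = None
    best_cost = float("inf")
    for entry in entries:
        if entry.tag not in allowed_tags:
            continue  # the current state excludes this clip
        cost = sum((q - f) ** 2 for q, f in zip(query, entry.features))
        if cost < best_cost:
            best, best_cost = entry, cost
    return best


db = [
    PoseEntry("run_loop", 12, "locomotion", [1.0, 0.0]),
    PoseEntry("vault_low", 0, "vault", [1.0, 0.1]),
]
print(search_in_state([1.0, 0.1], db, {"locomotion"}).clip)  # run_loop
print(search_in_state([1.0, 0.1], db, {"vault"}).clip)       # vault_low
```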

“You can effectively have the best of both worlds,” continued Ken.

Community

Lastly, one of the most exciting parts of developing Motion Matching is the online community being built around it. Whether it’s sharing ideas through Discord or providing animations for other game developers to work with, the range of possibilities Motion Matching offers is ever-expanding.

“One of the things people lack the most is animation data. I’ve already released the data I captured with Xsens so it can be used in the demo scenes. Hopefully, that kind of animation data becomes more common so more people can use Motion Matching now,” said Ken.

“I have about 500 customers in a Discord channel. A fair few of them are using Xsens Link and MTw Awinda, and there are some sports games being made, and more. There’s loads of knowledge about Motion Matching being shared,” said Ken.

You can view Ken’s breakdown of Motion Matching here.
