Virtual YouTubers (or Vtubers) have taken the internet by storm. After becoming a trend in Japan at the beginning of 2018, they are here to stay. Many of them have a big following, and some have millions of subscribers, which makes them very interesting for merchandise such as Nendoroids, official promotional material, and collaborations with other YouTubers.

But what is the technology behind these virtual anime girls?

The setup of a Virtual YouTuber mostly involves facial recognition, gesture recognition and animation software. Combining these technologies can be tricky. The best-known mishap with this technology is the reveal of Noracat's true identity during a live broadcast.

Well-known companies in the Virtual YouTuber space like Cygames and CAPTUREROID use this typical Vtuber setup. In this blog, we will describe their Perfect Virtual YouTuber setup.

One of the most famous Vtubers at the moment is Kizuna Ai, with millions of subscribers to her channel. She is also the spokeswoman for the Japan National Tourism Organization. English-speaking Vtubers like CodeMiko are also on the rise.

The Perfect Virtual YouTuber Setup:

1. 3D avatar

First of all, you need a Vtuber avatar, and this sounds easier than it actually is. A full-body avatar needs to act and move naturally, and a unique avatar is not easy to make. It needs to be fully 'rigged' before it can move in a natural way. The easiest way to start is to download a model from sites like TurboSquid, Sketchfab or CGTrader.

Epic Games, the company behind Unreal Engine, also released MetaHuman Creator to create high-fidelity digital humans. There are also options to work with apps like ReadyPlayerMe or Wolf3D.

2. 3D animation software

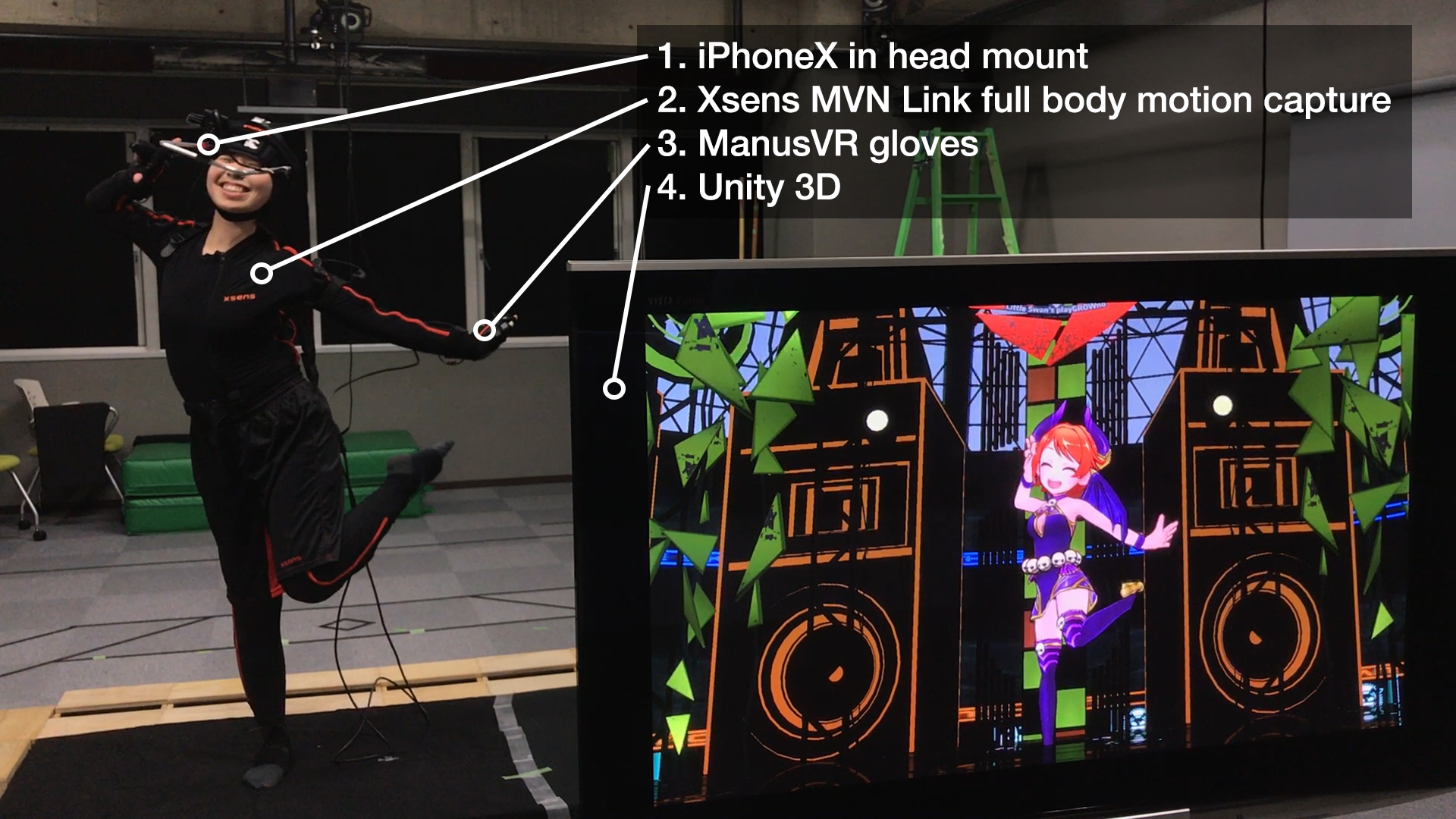

To pull it all together, you need 3D animation software such as Unreal, Unity 3D or iClone. It is really easy to stream Xsens' motion capture data into all major 3D animation software packages, either natively or via a plug-in. An overview of the Xsens integrations can be found here.

3. The full-body Xsens motion capture system

In this setup, you see the full-body motion capture system from Xsens, including the 'MVN Link' hardware (suit). The live motion capture data can be streamed into Unity using Xsens' MVN Animate software to give you the best live quality data you can get.

Many Vtubers use the MVN Awinda Starter in combination with the MVN Animate Plus software for live streaming. Want to know what we can do for you? Get in touch!

Are you actively looking for a motion capture system and want to compare data? You can also download Xsens motion capture files here.

4. An iPhone X with face recognition software attached to a head mount

There are several resources online on how to get facial data into Unity, iClone or Maya. We have used the Live Link Face app ourselves and had great results.

There are also high-end solutions such as Faceware.

5. Gloves

The finger tracking data from the Xsens Gloves by Manus is integrated in MVN Animate and can be streamed into Unity or Unreal. The same goes for the StretchSense gloves.
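To give a feel for why combining body, face and finger data can be tricky, here is a minimal Python sketch of the idea. It is an illustration under our own assumptions, not how MVN Animate, Unity or Unreal actually do it: the names `Sample`, `AvatarFrame` and `FrameBuilder`, the three source labels, the shared clock and the 0.1 second staleness limit are all hypothetical. The sketch keeps the newest sample from each device and, once per rendered frame, merges them into a single pose while flagging any device that has gone quiet.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical source names, one per capture device in the setup above.
SOURCES = ("body", "face", "hands")


@dataclass
class Sample:
    """One timestamped reading from a single capture source."""
    timestamp: float          # seconds on a shared clock (an assumption)
    values: Dict[str, float]  # e.g. joint angle or blendshape weight by name


@dataclass
class AvatarFrame:
    """Everything needed to pose the avatar for one rendered frame."""
    timestamp: float
    channels: Dict[str, Dict[str, float]]
    stale: Tuple[str, ...]    # sources with no sufficiently recent sample


class FrameBuilder:
    def __init__(self, max_age: float = 0.1) -> None:
        self.max_age = max_age
        self._latest: Dict[str, Sample] = {}

    def push(self, source: str, sample: Sample) -> None:
        """Store a sample, ignoring ones older than what is already held."""
        if source not in SOURCES:
            raise ValueError(f"unknown source: {source}")
        held = self._latest.get(source)
        if held is None or sample.timestamp >= held.timestamp:
            self._latest[source] = sample

    def build(self, now: float) -> AvatarFrame:
        """Combine the newest sample per source into one frame."""
        channels: Dict[str, Dict[str, float]] = {}
        stale = []
        for source in SOURCES:
            sample = self._latest.get(source)
            if sample is None:
                channels[source] = {}
                stale.append(source)
                continue
            # Hold the last known pose even if it is old, but flag it.
            channels[source] = dict(sample.values)
            if now - sample.timestamp > self.max_age:
                stale.append(source)
        return AvatarFrame(now, channels, tuple(stale))


builder = FrameBuilder(max_age=0.1)
builder.push("body", Sample(1.00, {"right_elbow": 42.0}))
builder.push("face", Sample(0.98, {"jaw_open": 0.6}))
builder.push("hands", Sample(0.50, {"left_index_curl": 0.2}))
frame = builder.build(now=1.02)
print(frame.stale)
```

In this example the glove sample is half a second old, so the frame still carries the last known finger pose but lists `hands` as stale. What to do with a stale source (freeze it, blend back to a rest pose, or hide it) is a design choice for your own setup.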

There is of course more technology available to get your YouTuber avatar live on screen, and the Vtuber market is developing rapidly. Xsens motion capture technology has been proven for many years and has a long track record in live and streaming animations.

Xsens has many Vtuber users. To give you an example of what they do, check out these stories:

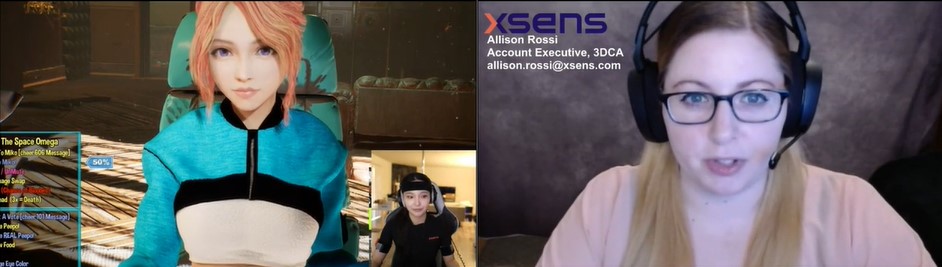

CodeMiko setup with Live Link in Unreal Engine

CodeMiko is a Vtuber mostly active on Twitch. Go check her out here. She has shared many videos showing her full tech setup.

Also, check out the 'How to become a Vtuber' webinar we did with her at the bottom of this page.

Here are some examples of Vtuber-style videos:

Cory Strassburger with an iPhone 12, Unreal Engine, and Xsens setup

Cory's new character Blu uses a setup with Xsens mocap in tandem with an iPhone 12, streamed via Unreal Engine. You can see his setup at the end of the video below.

One Piece Vtuber

One Piece voice actors Mayumi Tanaka and Kappei Yamaguchi wear the Xsens MVN motion capture system while doing a live Vtuber show as their characters Luffy and Usopp.

Webinar: How to become a Vtuber

Want to become one of them? We have the perfect webinar for you! During our Mocap Content Creator Conference (MCCC), we talked to CodeMiko about how she became a Vtuber. This webinar will give you insights into her journey, including how to utilize Xsens motion capture.